Experimenting with data availability metric

Happy New Year, everyone 🎉! Time for some chaos experiments again 😃.

For today's chaos day, I was joined by Pranjal, the newest addition to the reliability testing team at Camunda (welcome 🎉).

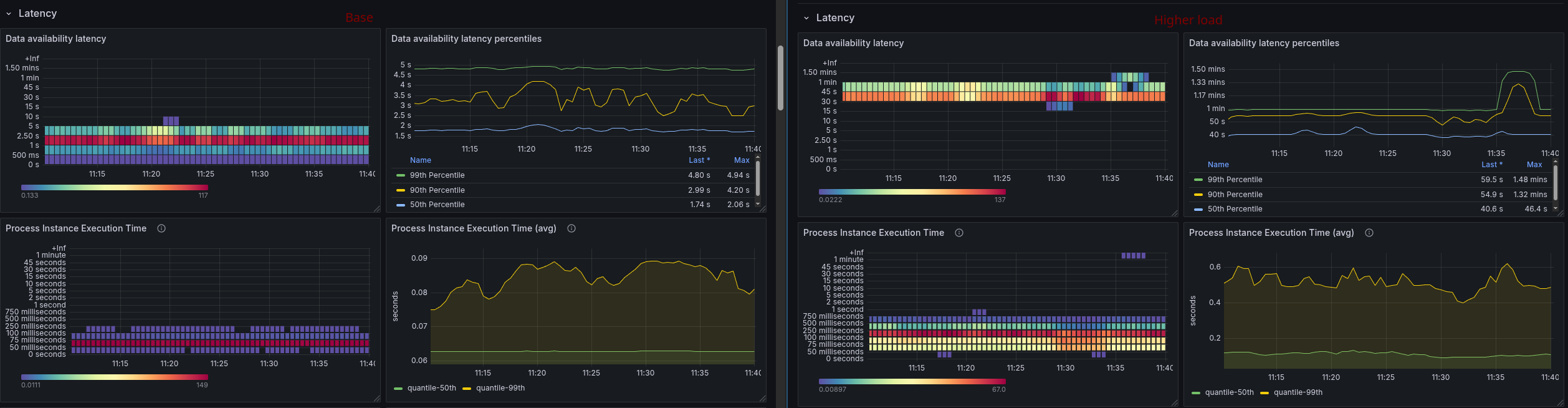

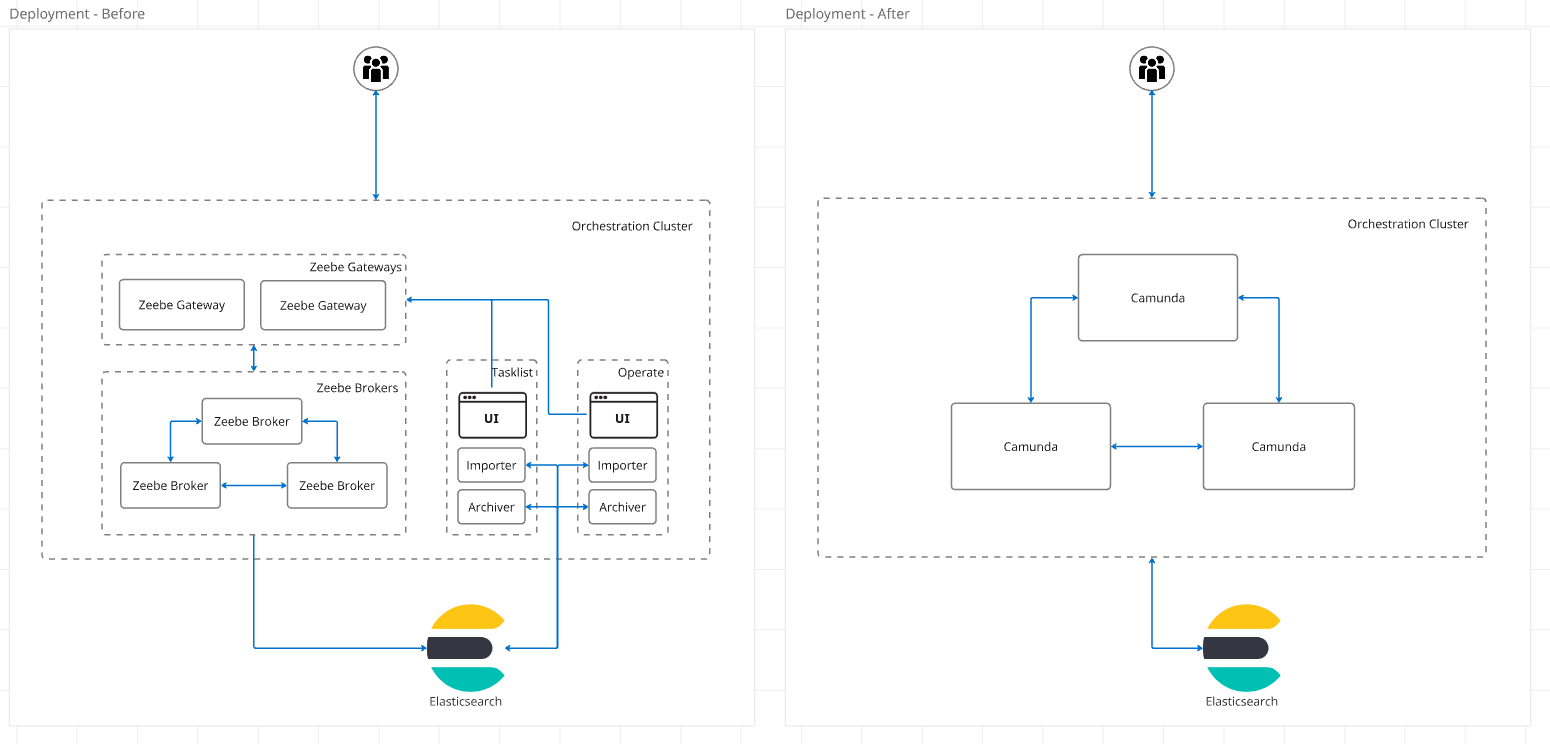

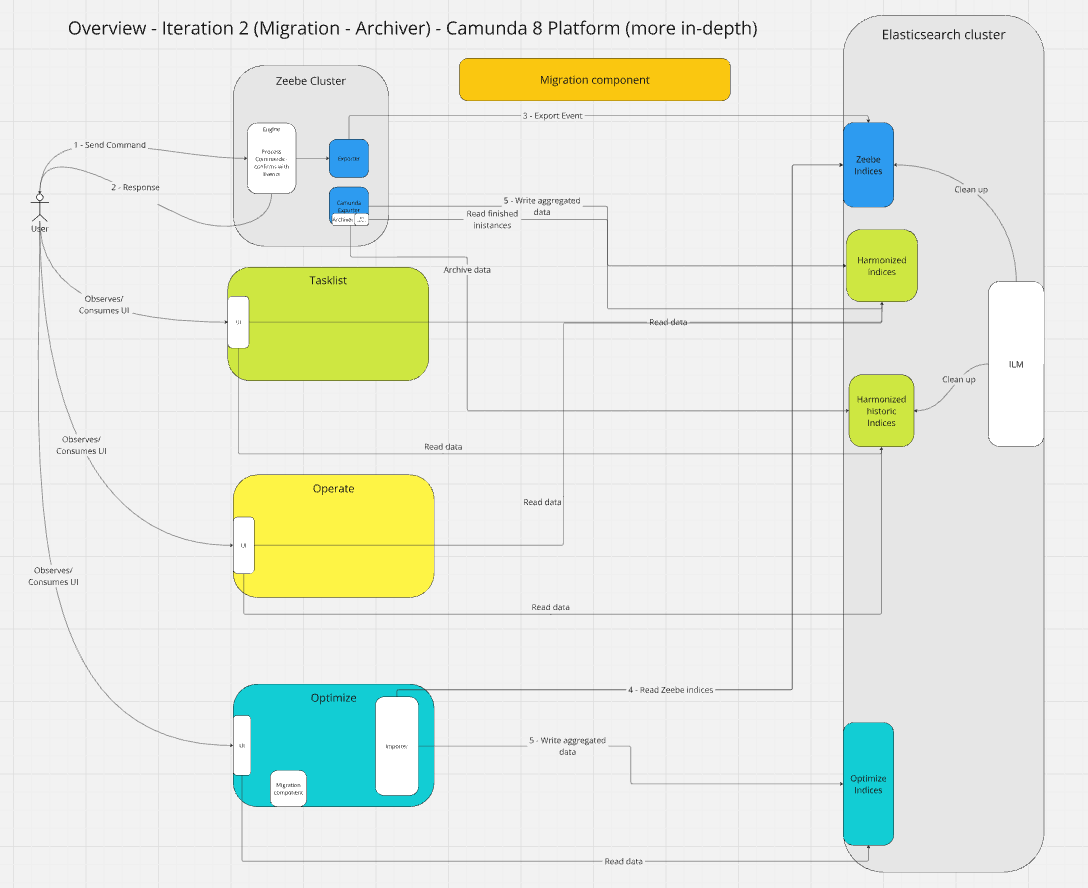

We planned to experiment with the new data availability metric, which we recently added to our load testing infrastructure (for more details, see the related PR). In short, we measure the time from creating a process instance until it is actually available to the user via the API. This also lets us reason about how long it takes for Operate to show new data.
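To make the idea behind the metric concrete, here is a minimal sketch of such a measurement. It is not the code from our load testing infrastructure: `measure_data_availability`, `create_instance`, and `is_visible` are hypothetical names, and the two callables stand in for whatever client calls create a process instance and query it via the API. The sketch also assumes simple polling with a fixed interval.

```python
import time
from typing import Callable


def measure_data_availability(
    create_instance: Callable[[], str],
    is_visible: Callable[[str], bool],
    timeout_s: float = 30.0,
    poll_interval_s: float = 0.05,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Return the seconds between creating an instance and first seeing it.

    Both callables are hypothetical placeholders: `create_instance` creates a
    process instance and returns its key, `is_visible` asks the query API
    whether that key can already be found.
    """
    # Assumption: the clock starts right before the create request is sent.
    start = clock()
    key = create_instance()

    while True:
        if is_visible(key):
            # First successful query: this is the data availability latency.
            return clock() - start
        if clock() - start >= timeout_s:
            raise TimeoutError(
                f"instance {key} not visible after {timeout_s} seconds"
            )
        sleep(poll_interval_s)
```

Note that the polling interval bounds the resolution of the measurement: with a 50 ms interval, the reported latency can be up to roughly 50 ms higher than the real one.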

The goal for today was to gain a better understanding of how the system behaves under higher load and how this affects data availability. We focused on the orchestration cluster, meaning data availability for Operate and Tasklist.

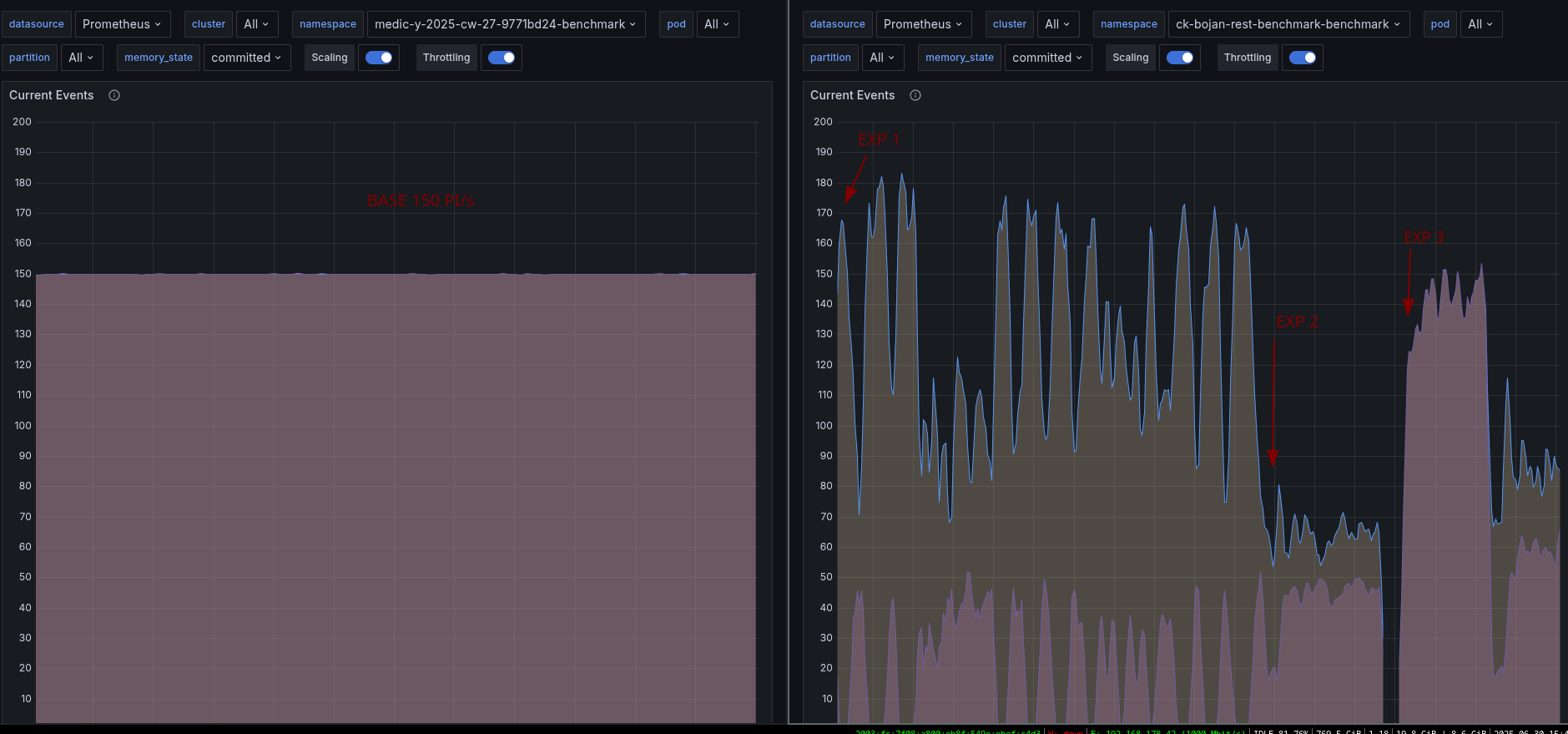

TL;DR: We observed that increasing the process instance creation rate results in higher data availability times. While experimenting with different workloads, we discovered that the typical load test is still not working well. While investigating the platform's behavior, we found a recently introduced regression that limits our general maximum throughput. We also identified suboptimal error handling in the Gateway, which causes request retries and can exacerbate load issues.